Large Language Models (LLMs) have changed the way we interact with technology. From AI-powered chat assistants to enterprise-grade copilots, they can generate human-like responses, automate tasks, and analyze unstructured data. But there’s one thing they often lack: real-world context.

To truly be useful, LLMs need structured access to data from tools like Slack, CRMs, internal APIs, and file systems. Traditionally, this means building a separate integration for each tool, a process that’s time-consuming, hard to scale, and difficult to maintain. That’s where the Model Context Protocol (MCP) steps in.

# What is MCP?

Model Context Protocol (MCP) is an open standard designed to bridge the gap between LLMs and real-world systems. It provides a unified, consistent, and secure way for AI models to interact with external tools, services, and data sources.

Think of MCP as the USB-C port for AI. Instead of building a custom integration for every new tool, MCP gives you one standard way to connect them all to your LLM.
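Concretely, MCP messages are JSON-RPC 2.0 objects. The sketch below builds a `tools/call` request and a matching reply in the shape a client and server exchange; the tool name `get_weather`, its `city` argument, and the result text are hypothetical, chosen only for illustration.

```python
import json
from typing import Any, Dict

def make_tool_call(request_id: int, tool_name: str, arguments: Dict[str, Any]) -> str:
    """Serialize a JSON-RPC 2.0 request asking an MCP server to run a tool."""
    return json.dumps({
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    })

def make_tool_result(request_id: int, text: str) -> str:
    """Serialize a server reply carrying a single text content item."""
    return json.dumps({
        "jsonrpc": "2.0",
        "id": request_id,  # echoes the request id so the client can pair reply and request
        "result": {"content": [{"type": "text", "text": text}], "isError": False},
    })

# Hypothetical tool, arguments, and result text, for illustration only.
print(make_tool_call(1, "get_weather", {"city": "Paris"}))
print(make_tool_result(1, "Sunny, 18 degrees C"))
```

Because every server uses this same envelope, a host can talk to a file server, a CRM server, or a weather server with the same client code; only the method and `params` change.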

# Why is MCP Needed?

Building AI that understands your tools requires more than just a good model. It requires seamless communication between the LLM and external systems.

Without MCP:

- You build separate connectors for each tool

- You manage API formats, auth flows, and response handling for each system

- You face challenges in maintaining scalability and consistency

With MCP:

- You follow one protocol for all connections

- You maintain a modular, reusable architecture

- You give your LLM structured context with less effort and clearer security boundaries
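The contrast can be sketched in a few lines. Below, two hypothetical per-tool connectors (`SlackConnector`, `CrmConnector`) each have their own method names and argument shapes, while the MCP-style side needs only one generic `call_tool` function because every server answers the same `tools/list` and `tools/call` methods. `FakeServer` is an illustrative in-memory stand-in for a real MCP server, with simplified result shapes.

```python
from typing import Any, Callable, Dict

# Without MCP: each integration exposes its own bespoke interface (hypothetical examples).
class SlackConnector:
    def post_message(self, channel: str, text: str) -> str:
        return f"posted to {channel}: {text}"

class CrmConnector:
    def lookup_customer(self, customer_id: int) -> dict:
        return {"id": customer_id, "name": "Example Corp"}

# With MCP: each server wraps one integration behind the same shared methods.
class FakeServer:
    """In-memory stand-in for an MCP server; maps tool names to Python callables."""

    def __init__(self, tools: Dict[str, Callable[..., Any]]):
        self.tools = tools

    def handle(self, method: str, params: dict) -> dict:
        if method == "tools/list":
            return {"tools": [{"name": name} for name in self.tools]}
        if method == "tools/call":
            output = self.tools[params["name"]](**params["arguments"])
            return {"content": [{"type": "text", "text": str(output)}]}
        raise ValueError(f"unsupported method: {method}")

def call_tool(server: FakeServer, name: str, arguments: dict) -> str:
    """One generic client function that works against any server."""
    result = server.handle("tools/call", {"name": name, "arguments": arguments})
    return result["content"][0]["text"]

slack = FakeServer({"post_message": SlackConnector().post_message})
crm = FakeServer({"lookup_customer": CrmConnector().lookup_customer})
print(call_tool(slack, "post_message", {"channel": "#general", "text": "hi"}))
print(call_tool(crm, "lookup_customer", {"customer_id": 7}))
```

Adding a third integration means wrapping it in another server; `call_tool` itself does not change.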

# MCP Architecture

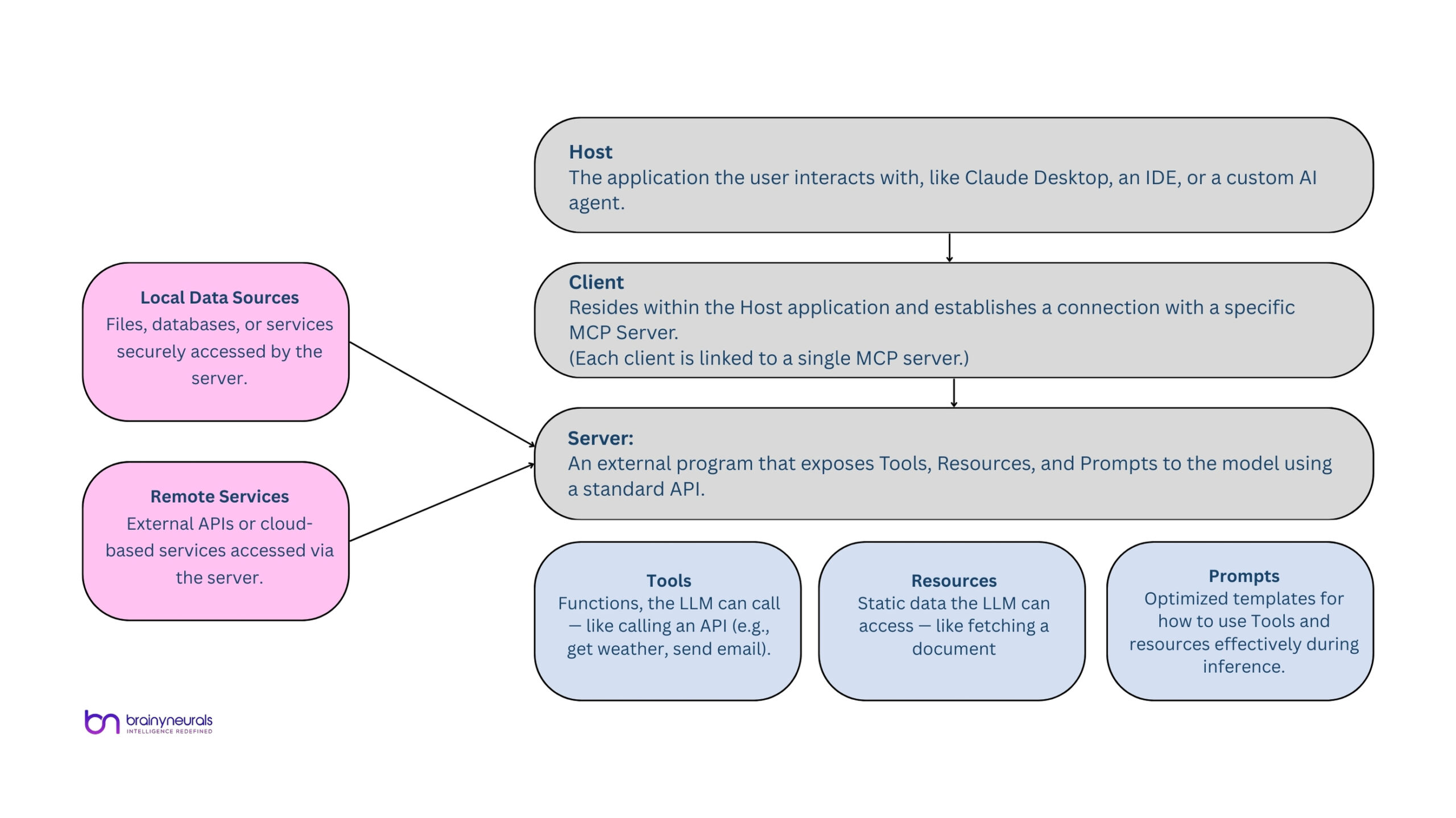

MCP is built around a clear client-server architecture that supports modular, scalable AI systems:

- MCP Hosts: Applications like Claude Desktop, AI-driven IDEs, or custom agents that need access to external tools and data.

- MCP Clients: Reside within Hosts and establish one-to-one connections with specific MCP Servers.

- MCP Servers: Lightweight external programs that expose Tools, Resources, and Prompts through the standardized protocol. They can connect to both local and remote data sources.
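The one-to-one relationship between clients and servers can be modeled with a few plain classes. This is a structural sketch only: it uses in-process objects instead of real transports (MCP servers typically run over stdio or HTTP), and the class names and the `files` and `weather` servers are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Dict, List

@dataclass
class Server:
    """An MCP server: exposes named tools, resources, and prompts."""
    name: str
    tools: List[str] = field(default_factory=list)
    resources: List[str] = field(default_factory=list)
    prompts: List[str] = field(default_factory=list)

@dataclass
class Client:
    """Lives inside the host and is bound to exactly one server."""
    server: Server

    def list_tools(self) -> List[str]:
        return list(self.server.tools)

@dataclass
class Host:
    """The application (e.g. a desktop assistant or IDE) that owns the clients."""
    clients: Dict[str, Client] = field(default_factory=dict)

    def connect(self, server: Server) -> Client:
        # One dedicated client per server keeps each connection isolated.
        if server.name in self.clients:
            raise ValueError(f"already connected to {server.name}")
        client = Client(server)
        self.clients[server.name] = client
        return client

    def all_tools(self) -> Dict[str, List[str]]:
        return {name: client.list_tools() for name, client in self.clients.items()}

host = Host()
host.connect(Server("files", tools=["read_file"], resources=["file:///notes.txt"]))
host.connect(Server("weather", tools=["get_forecast"]))
print(host.all_tools())  # {'files': ['read_file'], 'weather': ['get_forecast']}
```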

# Components of MCP Servers

- Tools (Model-controlled): Functions the LLM can call to perform tasks, like accessing a weather API.

- Resources (Application-controlled): Read-only data the application can supply to the LLM as context, like documents or database records.

- Prompts (User-controlled): Predefined templates that guide how tools and resources are used effectively.

- Local Data Sources: Files, databases, or services securely accessed by the server.

- Remote Services: External APIs or cloud-based services accessed via the server.

MCP coordinates the flow of data and instructions between the AI model and external systems.
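The three server primitives described above map onto separate protocol methods: tools are listed with `tools/list` and invoked with `tools/call`, resources are fetched with `resources/read`, and prompts are expanded with `prompts/get`. The dispatcher below is a simplified, in-memory sketch of that mapping, not the official SDK; the `get_forecast` tool, the `docs://handbook` resource URI, and the `summarize` prompt are hypothetical, and the forecast is a fixed placeholder standing in for a remote weather API.

```python
from typing import Any, Callable, Dict

# Tool (model-controlled): a real server would call a remote API here.
def get_forecast(city: str) -> str:
    return f"Forecast for {city}: placeholder data"

TOOLS: Dict[str, Callable[..., str]] = {"get_forecast": get_forecast}

# Resource (application-controlled): read-only data addressed by URI.
RESOURCES: Dict[str, str] = {"docs://handbook": "Placeholder handbook text."}

# Prompt (user-controlled): a template the host expands into messages.
PROMPTS: Dict[str, str] = {"summarize": "Summarize the following text:\n{text}"}

def handle(method: str, params: Dict[str, Any]) -> Dict[str, Any]:
    """Route an MCP-style request to the matching primitive (simplified result shapes)."""
    if method == "tools/list":
        return {"tools": [{"name": name} for name in TOOLS]}
    if method == "tools/call":
        text = TOOLS[params["name"]](**params.get("arguments", {}))
        return {"content": [{"type": "text", "text": text}]}
    if method == "resources/read":
        uri = params["uri"]
        return {"contents": [{"uri": uri, "mimeType": "text/plain", "text": RESOURCES[uri]}]}
    if method == "prompts/get":
        text = PROMPTS[params["name"]].format(**params.get("arguments", {}))
        return {"messages": [{"role": "user", "content": {"type": "text", "text": text}}]}
    raise ValueError(f"unknown method: {method}")

print(handle("resources/read", {"uri": "docs://handbook"})["contents"][0]["text"])
```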

# How MCP Works (Simplified Flow)

- Start – The Host app creates a client for each server it connects to.

- Discover – The client asks its server for its capabilities (tools, resources, prompts).

- Prepare – The Host shares the available tools and data with the LLM in a structured format.

- Request – The LLM decides it needs a tool or data, and the client sends the corresponding request to the server.

- Execute – The server performs the task (like calling an API).

- Respond – The server sends back the result.

- Complete – The Host passes the result to the LLM, which generates the final response.
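The seven steps can be traced end to end in a small simulation. Everything here is a stand-in: `FakeServer` replaces a real MCP server with one hypothetical `get_time` tool, and `fake_llm` is a hypothetical rule-based function playing the model's role; in practice that step would be an LLM call that picks a tool from the list it was given.

```python
from typing import Any, Dict, List, Optional

class FakeServer:
    """In-memory MCP-style server with a single hypothetical tool."""

    def handle(self, method: str, params: Dict[str, Any]) -> Dict[str, Any]:
        if method == "initialize":
            return {"capabilities": {"tools": {}}}
        if method == "tools/list":
            return {"tools": [{"name": "get_time", "description": "Current server time",
                               "inputSchema": {"type": "object", "properties": {}}}]}
        if method == "tools/call" and params["name"] == "get_time":
            # Execute: a real server would call an API or read a system clock here.
            return {"content": [{"type": "text", "text": "12:00"}]}
        raise ValueError(f"unsupported request: {method}")

def fake_llm(question: str, tools: List[Dict[str, Any]],
             tool_result: Optional[str] = None) -> Dict[str, Any]:
    """Hypothetical stand-in for the model: requests a tool once, then answers."""
    offered = any(t["name"] == "get_time" for t in tools)
    if tool_result is None and offered and "time" in question:
        return {"tool_call": {"name": "get_time", "arguments": {}}}
    return {"answer": f"It is {tool_result}." if tool_result else "I don't know."}

def run(question: str) -> str:
    server = FakeServer()  # Start: the host opens a client connection (simulated in-process)
    server.handle("initialize", {"protocolVersion": "2024-11-05", "capabilities": {},
                                 "clientInfo": {"name": "demo-host", "version": "0.1"}})
    tools = server.handle("tools/list", {})["tools"]      # Discover
    decision = fake_llm(question, tools)                  # Prepare + Request
    if "tool_call" not in decision:
        return decision["answer"]
    result = server.handle("tools/call", decision["tool_call"])  # Execute + Respond
    text = result["content"][0]["text"]
    return fake_llm(question, tools, tool_result=text)["answer"]  # Complete

print(run("What time is it?"))  # It is 12:00.
```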

# Benefits of MCP

- Standardization: One protocol to rule them all, so tools are easy to plug and play.

- Scalability: Easily extend your assistant’s reach without rewriting connectors.

- Modularity: Clean separation of concerns: Hosts, Clients, and Servers all evolve independently.

- Security: Each client-server connection has a defined boundary, and the Host controls which servers the LLM can reach.

- Interoperability: Works across environments and LLM vendors.

# Where MCP Can Help: Real-World Use Cases

- AI assistants that retrieve data from internal systems

- Developer copilots that access repository issues or test environments

- Internal bots that summarize documents or generate reports

- Enterprise agents that act across departments with real-time data

# Traditional APIs vs. MCP: Choosing the Right Approach

Traditional APIs may be a better fit if your use case demands precise, controlled, and highly predictable behavior. MCP is ideal for scenarios that require flexibility, context awareness, and dynamic interactions, like AI assistants and multi-tool agents, but may be less suited to deterministic systems that require strict limits.

# Conclusion

Model Context Protocol is more than just a technical specification — it’s a foundational shift in how AI systems can be built. Standardizing the connection between LLMs and tools enables AI that is not just smart but truly useful.

At Brainy Neurals, we believe in building AI that doesn’t just generate smart text but understands your tools, your data, and your goals. As an AI development company, we offer a conversational AI service that specializes in building intelligent chatbots and assistants powered by large language models (LLMs). We implement open standards like MCP to create smarter, integrated solutions that connect seamlessly with business tools, provide real-time context, and drive productivity. Our goal is to help clients build AI systems that are context-rich, maintainable, and future-ready.